Blog

Here is a short press review, mostly about Oracle technologies and Solaris in particular, and a little more:

READ_ME_FIRST: What Do I Do With All of Those SPARC Threads?

With an amazing 1,536 threads in an Oracle M5-32 system, the number of threads in a single system has never been so high. This offers a tremendous processing capacity, but one may wonder how to make optimal use of all these resources.

In this technical white paper, we explain how the heavily threaded Oracle T5 and M5 servers can be deployed to efficiently consolidate and manage workloads using virtualization through Oracle Solaris Zones, Oracle VM Server for SPARC, and Oracle Enterprise Manager Ops Center, as well as how to improve the performance of a single application through multi-threading.

Linux Container (LXC) — Part 2: Working With Containers

Part 1 of this article series provided an overview of the Linux container technology. This second part intends to give you an impression of how to work with containers by showing a few practical examples. These can be easily followed and reproduced on an up-to-date Oracle Linux 6 system. For the first steps, it is recommended to install Oracle Linux inside a virtual environment like Oracle VM VirtualBox. Oracle provides a pre-installed and pre-configured Oracle Linux 6 VirtualBox image for free download from the Oracle Technology Network (OTN).

How to Get Best Performance From the Oracle ZFS Storage Appliance

These articles (a seven-part series) provide best practices and recommendations for configuring VMware vSphere 5.x with Oracle ZFS Storage Appliance to reach optimal I/O performance and throughput. The best practices and recommendations highlight configuration and tuning options for Fibre Channel, NFS, and iSCSI protocols.

The series also includes recommendations for the correct design of network infrastructure for VMware cluster and multi-pool configurations, as well as the recommended data layout for virtual machines. In addition, the series demonstrates the use of VMware linked clone technology with Oracle ZFS Storage Appliance.

IBM upgrades Flex Systems line

IBM on Tuesday rolled out a series of new Flex Systems that are designed to pack more computing power in tight data centers for cloud services.

IBM Opens POWER Technology for Development

IBM, along with Google, Mellanox, NVIDIA and Tyan, has announced that it will form a new consortium called OpenPOWER. The OpenPOWER Consortium will leverage IBM’s proven POWER processor to provide an open and flexible development platform aimed at accelerating the rate of innovation for advanced, next-generation data centers.

Back in time - or: zpool import -T

Sometimes you find out interesting stuff just by googling and trying something out. Yesterday I searched for some information about ZFS on FreeBSD (one of the OSes I keep in my OS zoo) and, in the course of it, I found a mail on a FreeBSD list talking about the command zpool import -T. -T? There is no -T option on zpool import? Or is it just in FreeBSD?

MISYS, Kondor and the SPARC T5 secure banking transactions

Misys is a major vendor of banking software packages, notably trading and risk management solutions. We represent more than 60% of this market worldwide. We are present in the four French institutional banks. [...]

We are currently carrying out an architecture redesign project for a large banking client that we will not name. The existing environment is a heterogeneous fleet of SPARC/Solaris servers running the KONDOR application.

mirroring progress in AIX

In the past, I posted this "snippet" as the solution to an often-asked question: how much mirroring is done? Today, I had an opportunity to use it. Man, it works like a charm!!! Such a small thing but so much joy :-) Oh boy, I am overdoing it for sure...

Flash geometry and performance

Last year StorageMojo interviewed Violin Memory CEO Don Basile. He noted that as flash feature sizes shrank, NAND would get slower and its endurance would drop.

IBM Forms OpenPower Consortium, Breathes New Life Into Power

Back in July, I started what will eventually be a series of stories on what IBM should be doing with its systems business. And like a bolt from the blue, the company did something that I have been mulling for the past several months in earnest and noodling for the past several years off and on. And what IBM has done is to mimic the ARM collective and open up the intellectual property surrounding its Power chips to help foster a broader ecosystem of users and system makers.

IBM To FTC: Make Oracle Stop Running Those Mean Server Ads Please

Oracle is back in lukewarm water over a line of ads that say its own Sparc and Exadata servers are between two and 20 times better than IBM's Power Systems servers. This time, instead of getting another slap on the wrist from an independent industry board that oversees advertising in the U.S., it could get a slap on the wrist from the Federal Trade Commission itself. It's time for IBM, which called Oracle "a serial offender," to take matters into its own hands.

Exploring Installation Options and User Roles

- http://www.oracle.com/technetwork/articles/servers-storage-admin/solaris-install-borges-1989211.html

Part 1 of a two-part series that describes how I installed Oracle Solaris 11 and explored its new packaging system and the way it handles roles, networking, and services. This article focuses first on exploring Oracle Solaris 11 without the need to install it, and then actually installing it on your system.

The life of a Linux RPM (package)

Another frequently asked question related to Oracle Linux is how versions of specific packages (RPMs) are picked.

Re-installing the IBM PureFlex™ System and IBM Flex FSM

So you have broken the FSM in your IBM Flex or IBM PureFlex™ System: either it won't power on any more, or it's just failing to start. In my case, after a software update it wouldn't boot up correctly and somehow the root user had been corrupted, which meant that I couldn't get access to the system. Below I'll go through the steps I took to get the software re-installed from the 'recovery' section of the internal disk on the FSM node.

Setting up an InfiniBand Network on IBM POWER AIX

In this doc I'm going to cover the considerations and set-up of an InfiniBand network, so you will need to plan ahead the number of systems that you need and the bandwidth of the adapter you plan to use.

ZFS/SLOG on SAN

What if you have ZFS deployed on SAN in a clustered environment and you require a dedicated SLOG?

It was never easier than today - Upgrading/Patching Solaris Cluster 4 and Solaris 11

- Step 1: "scinstall -u update" on all cluster nodes, while the system is running

- Step 2: "init 6" on one node at a time to perform a rolling upgrade.

That looks easy, doesn't it?
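The two steps above amount to an "update everywhere, then reboot one node at a time" ordering. Here is a minimal sketch of that ordering, assuming hypothetical node names and assuming the update command is Solaris Cluster's `scinstall` utility. It only builds the plan and does not run anything:

```python
def rolling_upgrade_plan(nodes):
    """Return (node, command) pairs in the order the two steps describe."""
    plan = []
    # Step 1: update every node while the cluster keeps running.
    for node in nodes:
        plan.append((node, "scinstall -u update"))
    # Step 2: reboot the nodes one at a time, so the others keep serving.
    for node in nodes:
        plan.append((node, "init 6"))
    return plan


if __name__ == "__main__":
    # "node1" and "node2" are hypothetical host names.
    for node, command in rolling_upgrade_plan(["node1", "node2"]):
        print(f"{node}: {command}")
```

In a real rolling upgrade one would presumably wait for each node to rejoin the cluster before rebooting the next; the sketch leaves that check out.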

Less known Solaris 11.1 features: pfedit

It's a really nifty feature: Let's assume you have a config file on your system and you want to allow your junior fellow admin to edit it from time to time, but you don't want to pass any further rights to him, because this machine is too important.

Solaris 11.1 has an interesting feature to delegate the privilege to edit just a file. The tool enabling this is called pfedit.

Memory Leak (and Growth) Flame Graphs

Your application memory usage is steadily growing, and you are racing against time to fix it. This could either be memory growth due to a misconfig, or a memory leak due to a software bug. For some applications, performance can begin to degrade as garbage collection works harder, consuming CPU. If an application grows too large, performance can drop off a cliff due to paging (swapping), or the application may be killed by the system (OOM killer). You want to take a quick look before either occurs, in case it’s an easy fix. But how?

Less known Solaris 11.1 features: Auditing pfedit usage

You have allowed junior to edit the httpd.conf and, one nice evening, you are sitting at home. Then: You get alerts on your mobile: Webserver down. You log into the server. You check the httpd.conf. You see an error. You correct it. You look into the change log. Nothing. You ask your colleagues who made this change. Nobody. Dang. As always. Classic "Whodunit".

segkp revisited

When you are running quite a number of zones and applications with a lot of threads, your system sends you messages like "cannot fork: Resource temporarily unavailable" in all your zones in parallel, and you are not running Solaris 11.1, you should do the following checks. They apply to a system without any segkp-related changes in /etc/system. The numbers used in this example are obfuscated by rounding from a real-life example.

Oracle Sun System Analysis (MOS best practices)

Oracle Sun System Analysis (OSSA) is a web service that provides detailed reports about your Solaris systems. This service offers two types of reports...

Oracle VM Server for SPARC 3.1

- https://blogs.oracle.com/jsavit/entry/oracle_vm_server_for_sparc

- http://www.c0t0d0s0.org/archives/7632-Oracle-VM-for-SPARC-3.1.html

A new version of Oracle VM Server for SPARC has been released with performance improvements and enhanced operational flexibility.

Upgrading to Oracle VM Server for SPARC 3.1 using Solaris 11.1

The previous blog entry described new features in Oracle VM Server for SPARC 3.1, and I commented that it was "really easy" to upgrade. In this blog I'll show the actual steps used to do the upgrade.

HOWTO Interpret, Understand and Resolve Common pkg(1M) Errors on Solaris 11

Solaris 11 has been out on the market for nigh on two years, and it's an absolutely brilliant evolution in the history of Solaris. However, I've come to notice one common issue that really shouldn't be an issue at all: the number of calls we're getting from people not being able to interpret the failure messages that pkg(1M) produces.

This post aims to explain how to interpret, understand and resolve the most common pkg(1M) errors.

SPARC presentations at Hotchips 25

I think we will see some news in the usual news outlets about upcoming SPARC silicon soon. The agenda of the currently running Hotchips 25 conference has three presentations related to SPARC...

Less known Solaris 11.1 features: A user in 1024 groups and a workaround for a 25 year old problem

For a long time the maximum number of groups a user could belong to was 16, albeit there was a way to get 32. In Solaris 11 and recent versions of Solaris 10, the maximum number of groups a user could belong to is 1024 (which is the same limit Windows sets in this regard). It's easy to set the new limit.
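To see where a given system stands, the limit and the current process's memberships can be queried from Python's standard library. This is a small sketch, assuming a Unix-like system where `os.sysconf` exposes `SC_NGROUPS_MAX`. It only reads the limit and does not show how Solaris raises it:

```python
import os


def group_limit_and_usage():
    """Return (max supplementary groups allowed, groups of this process)."""
    # SC_NGROUPS_MAX is the POSIX name for the supplementary-group limit.
    limit = os.sysconf("SC_NGROUPS_MAX")
    # getgroups() lists the supplementary group IDs of the current process.
    current = len(os.getgroups())
    return limit, current


if __name__ == "__main__":
    limit, current = group_limit_and_usage()
    print(f"limit: {limit}, in use by this process: {current}")
```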

Oracle Virtual Compute Appliance

There has been major news on the Oracle virtualization front with the announcement of the Oracle Virtual Compute Appliance ("OVCA" for short). This is an Oracle engineered system designed for virtualization, joining Oracle's family of engineered systems such as Exadata, Exalogic, and Oracle SuperCluster T5-8.

OVCA is intended for general-purpose use for a wide range of applications in virtual machines rather than being optimized for a specific workload. It is especially designed for quick deployment into production and ease of use. Customers can start up virtual machines about an hour after OVCA installation. That provides faster "time to value" than taking general-purpose systems and designing, adding, and configuring the network, storage and VM software needed to be useful.

An important milestone

Yesterday, with very little fanfare, illumos passed an important milestone.

So, what makes Solaris Zones so cool?

How do you virtualize? Do you emulate virtual machines? Do you partition your servers' hardware? Or do you run a container technology?

This post is about the third option, a container technology built right into Solaris: Solaris Zones. They are pretty awesome, especially on Solaris 11 - they're like vacation: once you go Zones, you won't want to leave them :) But what exactly makes Zones so cool?

Less^H^H^H^HUnknown features of Solaris 11: Virtual Consoles

Next time you are working on the console of your system (perhaps just the console you see in VirtualBox), just try pressing the Alt key together with the cursor-left or cursor-right key, or Alt+F1 for the first console (the system console), Alt+F2 for the second console, and so on. Nothing innovative, especially as Solaris used to have them in the past (but lost them for reasons unknown to me) ... more a feature from the "Damned! At last!"-department, but still really useful.

WP: SPARC M5 Domaining Best Practices Whitepaper

To learn more about "Domaining", take a look at the recently announced (August 20th) whitepaper: SPARC M5-32 Domaining Best Practices.

The SPARC M5-32 server provides three distinct forms of virtualization:

- Dynamic Domains

- Oracle VM Server for SPARC

- Oracle Solaris Zones

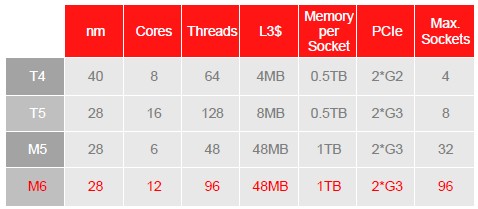

Oracle revs up Sparc M6 chip for seriously big iron

Bixby interconnect scales to 96 sockets

Hot Chips The new Sparc M6 processor, unveiled at the Hot Chips conference at Stanford University this week, makes a bold statement about Oracle's intent to invest in very serious big iron and go after Big Blue in a big way.

Oracle's move is something that the systems market desperately needs, particularly for customers where large main memory is absolutely necessary. And – good news for Ellison & CO. – with every passing day, big memory computing is getting to be more and more important, just like in the glory days of the RISC/Unix business.

Oracle software will run faster with new SPARC M6 chip

Oracle's database and high-performance workloads will run faster with the company's latest SPARC M6 chip, which has been tuned specially for the company's applications.

The latest SPARC processor has 12 processor cores, double the number in its predecessor, the M5, which shipped earlier this year. Each M6 core can run 8 threads simultaneously, giving the chip the ability to run 96 threads simultaneously, said Ali Vahidsafa, senior hardware engineer at Oracle, during a presentation about M6 at the Hot Chips conference in Stanford, California.
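The thread counts quoted in this review follow from simple multiplication. Here is a quick sketch of the arithmetic. The 12 cores, 8 threads per core, and 96-socket scaling come from the articles above. The M5-32 layout (32 sockets, 6 cores, 8 threads per core) is an assumption, inferred from the model name and from the M6 doubling the M5's core count:

```python
def hardware_threads(sockets, cores_per_socket, threads_per_core):
    """Total hardware threads in a system."""
    return sockets * cores_per_socket * threads_per_core


# One M6 chip: 12 cores x 8 threads per core.
m6_chip = hardware_threads(1, 12, 8)
# Assumed M5-32 layout: 32 sockets x 6 cores x 8 threads per core.
m5_32 = hardware_threads(32, 6, 8)
# An M6 system at the 96-socket interconnect limit.
m6_max = hardware_threads(96, 12, 8)

print(m6_chip, m5_32, m6_max)  # 96 1536 9216
```

The assumed M5-32 layout reproduces the 1,536 threads quoted at the top of this review, which suggests the assumption is at least consistent.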